from matplotlib import pyplot as plt

import numpy as np

import pandas as pd

import geopandas as gpd

import hvplot.pandas

import seaborn as sns

import requests

np.random.seed(42)Lecture 13B: Predictive modeling with scikit-learn, continued

pd.options.display.max_columns = 999- Dec 4, 2023

- Section 401

Picking up where we left off

We’ll start by recapping the exercise from last lecture: adding additional distance-based features to our housing price model…

Spatial amenity/disamenity features

The strategy

- Get the data for a certain type of amenity, e.g., restaurants, bars, or disamenity, e.g., crimes

- Data sources: 311 requests, crime incidents, Open Street Map

- Use scikit learn’s nearest neighbor algorithm to calculate the distance from each sale to its nearest neighbor in the amenity/disamenity datasets

Examples of new possible features…

Distance from each sale to:

- Graffiti 311 Calls (last lecture)

- Subway Stops (last lecture)

- Universities

- Parks

- City Hall

- New Construction Permits

- Aggravated Assaults

- Abandoned Vehicle 311 Calls

# Models

from sklearn.linear_model import LinearRegression

from sklearn.ensemble import RandomForestRegressor

# Model selection

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV

# Pipelines

from sklearn.pipeline import make_pipeline

# Preprocessing

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import StandardScaler, PolynomialFeatures, OneHotEncoder

# Neighbors

from sklearn.neighbors import NearestNeighborsdef get_carto_data(table_name, where=None, limit=None):

"""

Download data from CARTO given a specific table name and

optionally a where statement or limit.

"""

# the CARTO API url

carto_url = "https://phl.carto.com/api/v2/sql"

# Create the query

query = f"SELECT * FROM {table_name}"

# Add a where

if where is not None:

query = query + f" WHERE {where}"

# Add a limit

if limit is not None:

query = query + f" LIMIT {limit}"

# Make the request

params = {"q": query, "format": "geojson"}

response = requests.get(carto_url, params=params)

# Make the GeoDataFrame

return gpd.GeoDataFrame.from_features(response.json(), crs="EPSG:4326")Load and clean the data using the utility function above:

# the CARTO API url

carto_url = "https://phl.carto.com/api/v2/sql"

# The table name

table_name = "opa_properties_public"

# Only pull 2022 sales for single family residential properties

where = "sale_date >= '2022-01-01' AND sale_date < '2023-01-01'"

where = where + " AND category_code_description IN ('SINGLE FAMILY', 'Single Family')"

# Run the query

salesRaw = get_carto_data(table_name, where=where)

# Optional: put it a reproducible order for test/training splits later

salesRaw = salesRaw.sort_values("parcel_number")

# The feature columns we want to use

cols = [

"sale_price",

"total_livable_area",

"total_area",

"garage_spaces",

"fireplaces",

"number_of_bathrooms",

"number_of_bedrooms",

"number_stories",

"exterior_condition",

"zip_code",

"geometry"

]

# Trim to these columns and remove NaNs

sales = salesRaw[cols].dropna()

# Trim zip code to only the first five digits

sales['zip_code'] = sales['zip_code'].astype(str).str.slice(0, 5)

# Trim very low and very high sales

valid = (sales['sale_price'] > 3000) & (sales['sale_price'] < 1e6)

sales = sales.loc[valid]len(sales)17675Add new distance-based features:

def get_xy_from_geometry(df):

"""

Return a numpy array with two columns, where the

first holds the `x` geometry coordinate and the second

column holds the `y` geometry coordinate

Note: this works with both Point() and Polygon() objects.

"""

# NEW: use the centroid.x and centroid.y to support Polygon() and Point() geometries

x = df.geometry.centroid.x

y = df.geometry.centroid.y

return np.column_stack((x, y)) # stack as columns# Convert to meters and EPSG=3857

sales_3857 = sales.to_crs(epsg=3857)

# Extract x/y for sales

salesXY = get_xy_from_geometry(sales_3857)New feature: 311 Graffiti Calls

Source: https://www.opendataphilly.org/dataset/311-service-and-information-requests

Download the data:

# Select only those for grafitti and in 2022

where = "requested_datetime >= '01-01-2022' and requested_datetime < '01-01-2023'"

where = where + " AND service_name = 'Graffiti Removal'"

# Pull the subset we want

graffiti = get_carto_data("public_cases_fc", where=where)

# Remove rows with missing geometries

not_missing = ~graffiti.geometry.is_empty & graffiti.geometry.notna()

graffiti = graffiti.loc[not_missing]Get the x/y coordinates for the grafitti calls:

# Convert to meters in EPSG=3857

graffiti_3857 = graffiti.to_crs(epsg=3857)

# Extract x/y for grafitti calls

graffitiXY = get_xy_from_geometry(graffiti_3857)Run the neighbors algorithm to calculate the new feature:

# STEP 1: Initialize the algorithm

nbrs = NearestNeighbors(n_neighbors=5)

# STEP 2: Fit the algorithm on the "neighbors" dataset

nbrs.fit(graffitiXY)

# STEP 3: Get distances for sale to neighbors

grafDists, grafIndices = nbrs.kneighbors(salesXY)

# STEP 4: Get average distance to neighbors

avgGrafDist = grafDists.mean(axis=1)

# Set zero distances to be small, but nonzero

# IMPORTANT: THIS WILL AVOID INF DISTANCES WHEN DOING THE LOG

avgGrafDist[avgGrafDist==0] = 1e-5

# STEP 5: Add the new feature

sales['logDistGraffiti'] = np.log10(avgGrafDist)New feature: Subway stops

Use the osmnx package to get subway stops in Philly — we can use the ox.geometries_from_polygon() function.

- To select subway stations, we can use

station=subway: see the OSM Wikipedia - See Lecture 5A for a reminder on osmnx!

import osmnx as oxGet the geometry polygon for the Philadelphia city limits:

# Download the Philadelphia city limits

url = "https://opendata.arcgis.com/datasets/405ec3da942d4e20869d4e1449a2be48_0.geojson"

city_limits = gpd.read_file(url).to_crs(epsg=3857)

# Get the geometry from the city limits

city_limits_outline = city_limits.to_crs(epsg=4326).squeeze().geometrycity_limits_outlineUse osmnx to query OpenStreetMap to get all subway stations within the city limits:

# Get the subway stops within the city limits

subway = ox.features_from_polygon(city_limits_outline, tags={"station": "subway"})

# Convert to 3857 (meters)

subway = subway.to_crs(epsg=3857)/Users/nhand/mambaforge/envs/musa-550-fall-2023/lib/python3.10/site-packages/shapely/predicates.py:798: RuntimeWarning: invalid value encountered in intersects

return lib.intersects(a, b, **kwargs)

/Users/nhand/mambaforge/envs/musa-550-fall-2023/lib/python3.10/site-packages/shapely/set_operations.py:340: RuntimeWarning: invalid value encountered in union

return lib.union(a, b, **kwargs)Get the distance to the nearest subway station (\(k=1\)):

# STEP 1: x/y coordinates of subway stops (in EPGS=3857)

subwayXY = get_xy_from_geometry(subway.to_crs(epsg=3857))

# STEP 2: Initialize the algorithm

nbrs = NearestNeighbors(n_neighbors=1)

# STEP 3: Fit the algorithm on the "neighbors" dataset

nbrs.fit(subwayXY)

# STEP 4: Get distances for sale to neighbors

subwayDists, subwayIndices = nbrs.kneighbors(salesXY)

# STEP 5: add back to the original dataset

sales["logDistSubway"] = np.log10(subwayDists.mean(axis=1))sales.head()| sale_price | total_livable_area | total_area | garage_spaces | fireplaces | number_of_bathrooms | number_of_bedrooms | number_stories | exterior_condition | zip_code | geometry | logDistGraffiti | logDistSubway | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 783 | 450000 | 1785.0 | 1625.0 | 1.0 | 0.0 | 2.0 | 3.0 | 3.0 | 4 | 19147 | POINT (-75.14860 39.93145) | 2.097791 | 3.337322 |

| 12155 | 670000 | 2244.0 | 1224.0 | 0.0 | 0.0 | 3.0 | 4.0 | 3.0 | 3 | 19147 | POINT (-75.14817 39.93101) | 2.215476 | 3.350337 |

| 7348 | 790000 | 2514.0 | 1400.0 | 1.0 | 0.0 | 0.0 | 3.0 | 2.0 | 1 | 19147 | POINT (-75.14781 39.93010) | 2.194509 | 3.362296 |

| 2221 | 195000 | 1358.0 | 840.0 | 0.0 | 0.0 | 2.0 | 3.0 | 3.0 | 4 | 19147 | POINT (-75.14887 39.93026) | 2.212881 | 3.339347 |

| 12129 | 331000 | 868.0 | 546.0 | 0.0 | 0.0 | 2.0 | 2.0 | 2.0 | 3 | 19147 | POINT (-75.14881 39.93012) | 2.206706 | 3.340635 |

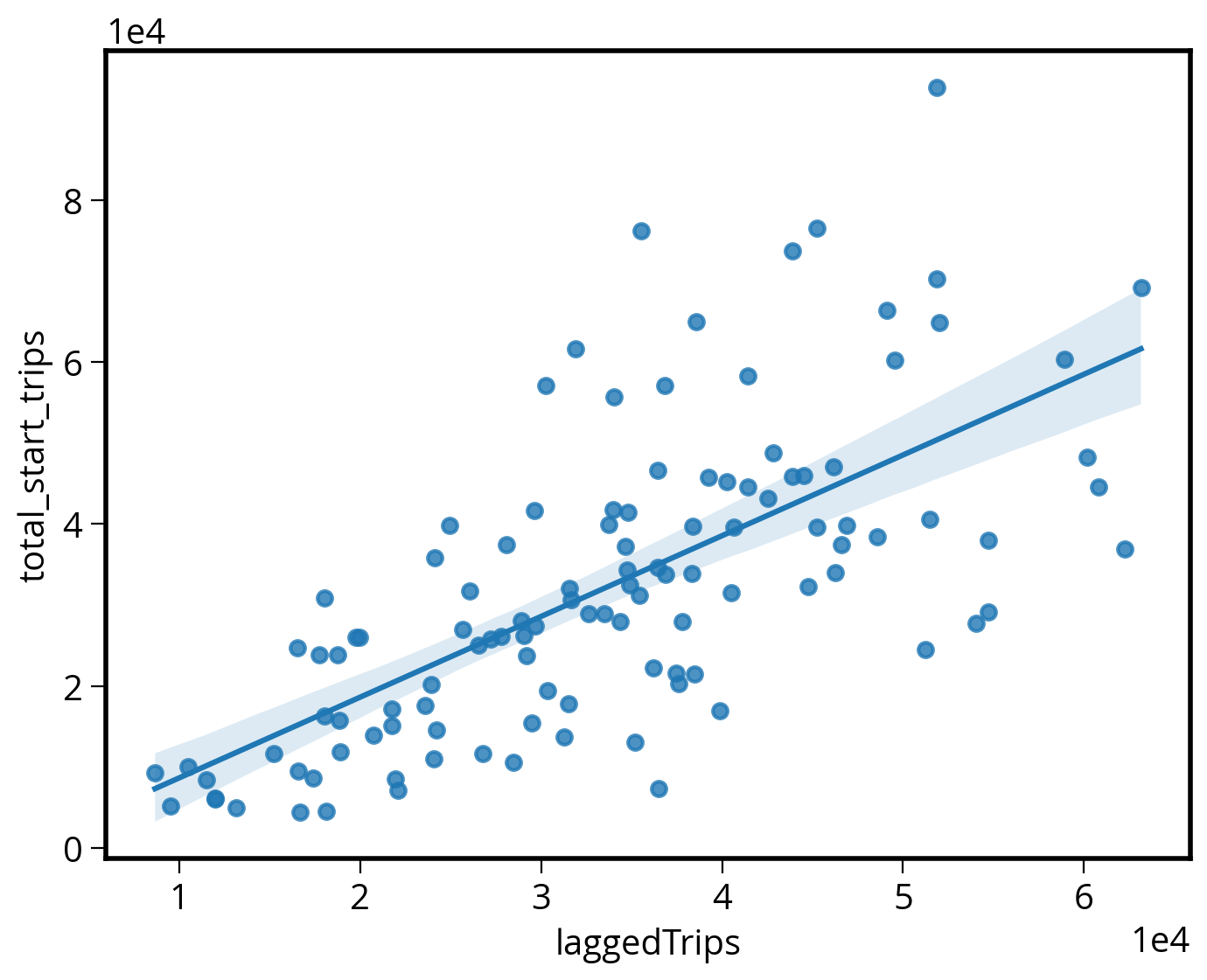

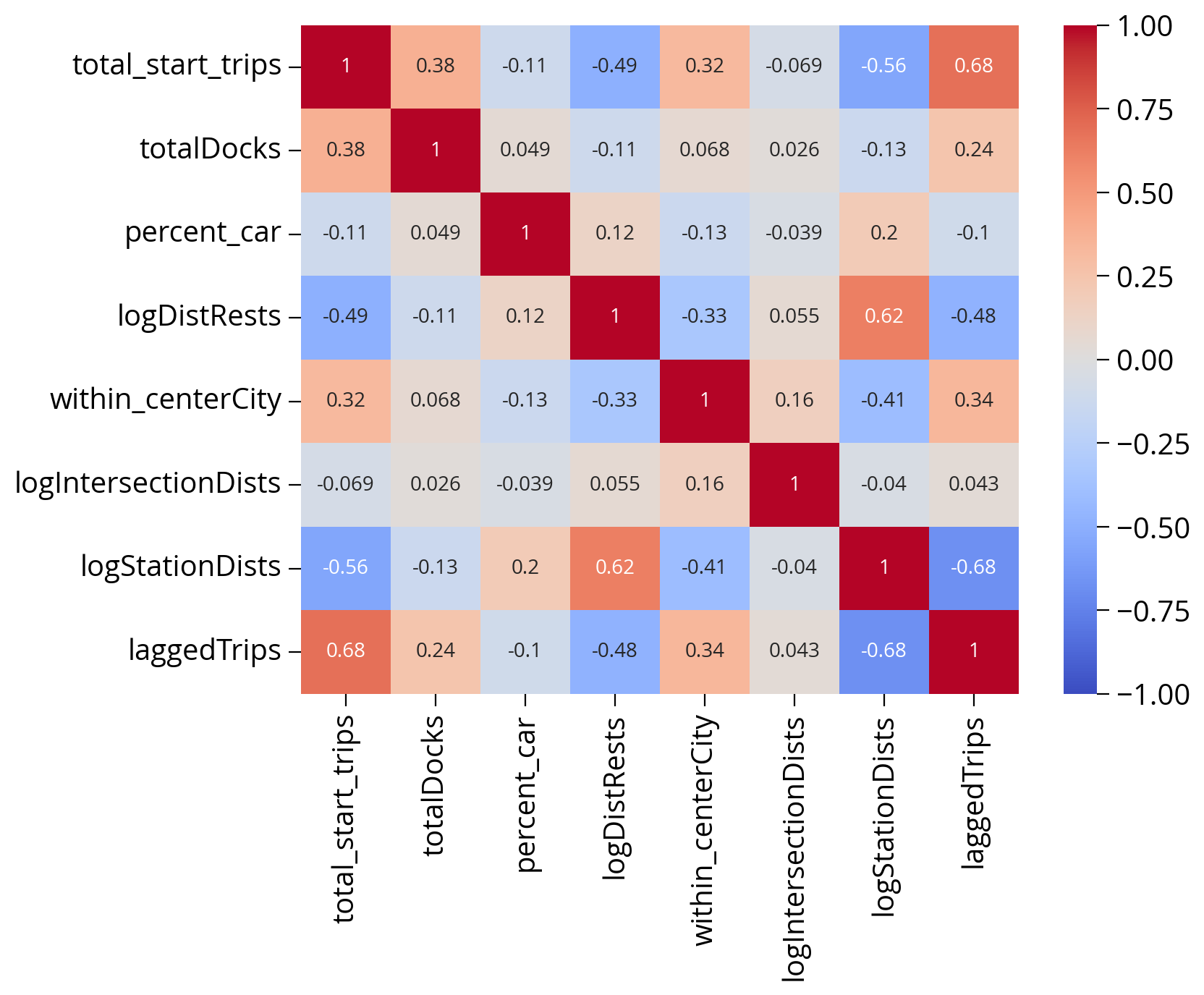

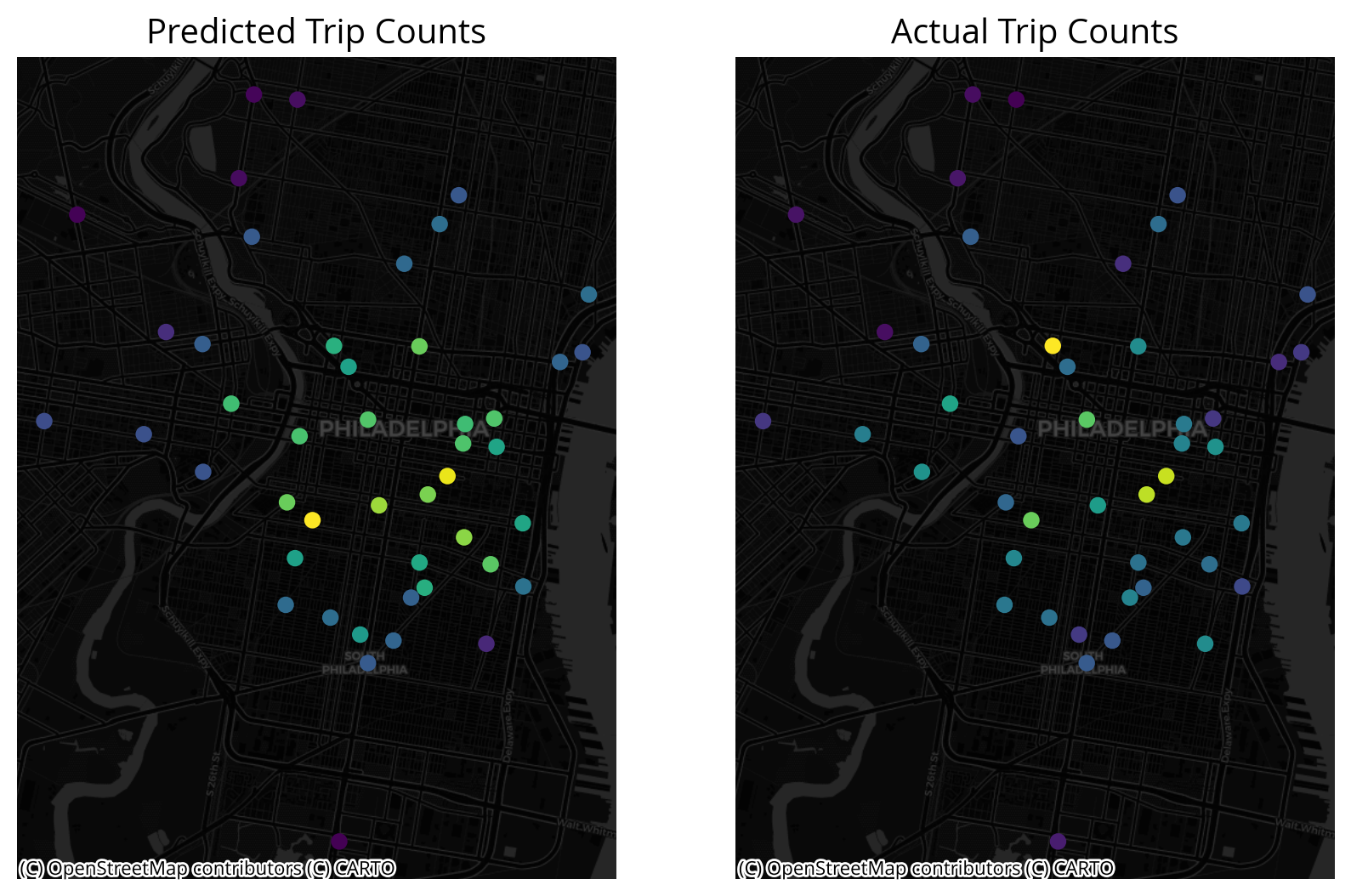

Now, let’s run the model…

# Numerical columns

num_cols = [

"total_livable_area",

"total_area",

"garage_spaces",

"fireplaces",

"number_of_bathrooms",

"number_of_bedrooms",

"number_stories",

"logDistGraffiti", # NEW

"logDistSubway" # NEW

]

# Categorical columns

cat_cols = ["exterior_condition", "zip_code"]# Set up the column transformer with two transformers

transformer = ColumnTransformer(

transformers=[

("num", StandardScaler(), num_cols),

("cat", OneHotEncoder(handle_unknown="ignore"), cat_cols),

]

)# Initialize the pipeline

# NOTE: only use 20 estimators here so it will run in a reasonable time

pipe = make_pipeline(

transformer, RandomForestRegressor(n_estimators=20, random_state=42)

)# Split the data 70/30

train_set, test_set = train_test_split(sales, test_size=0.3, random_state=42)

# the target labels

y_train = np.log(train_set["sale_price"])

y_test = np.log(test_set["sale_price"])# Fit the training set

# REMINDER: use the training dataframe objects here rather than numpy array

pipe.fit(train_set, y_train);# What's the test score?

# REMINDER: use the test dataframe rather than numpy array

pipe.score(test_set, y_test)0.5818673189789565Can we improve on this?

Exercise from last lecture: How about other spatial features?

- I’ve listed out several other types of potential sources of new distance-based features from OpenDataPhilly

- Choose a few and add new features

- Re-fit the model and evalute the performance on the test set and feature importances

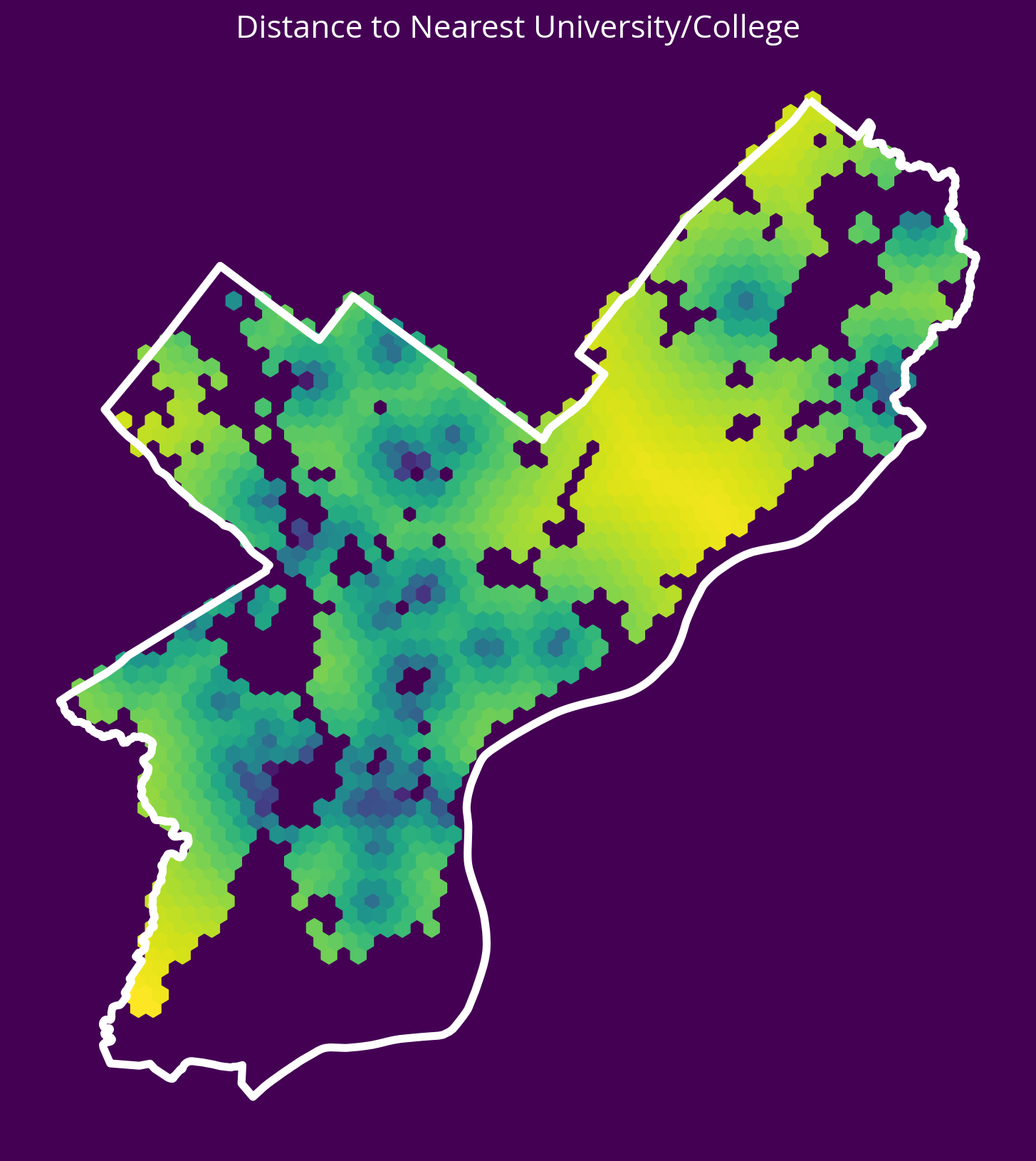

1. Universities

New feature: Distance to the nearest university/college

- Source: OpenDataPhilly

- GeoJSON URL

# Get the data

url = "https://opendata.arcgis.com/api/v3/datasets/8ad76bc179cf44bd9b1c23d6f66f57d1_0/downloads/data?format=geojson&spatialRefId=4326"

univs = gpd.read_file(url)

# Get the X/Y

univXY = get_xy_from_geometry(univs.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=1)

nbrs.fit(univXY)

univDists, _ = nbrs.kneighbors(salesXY)

# Add the new feature

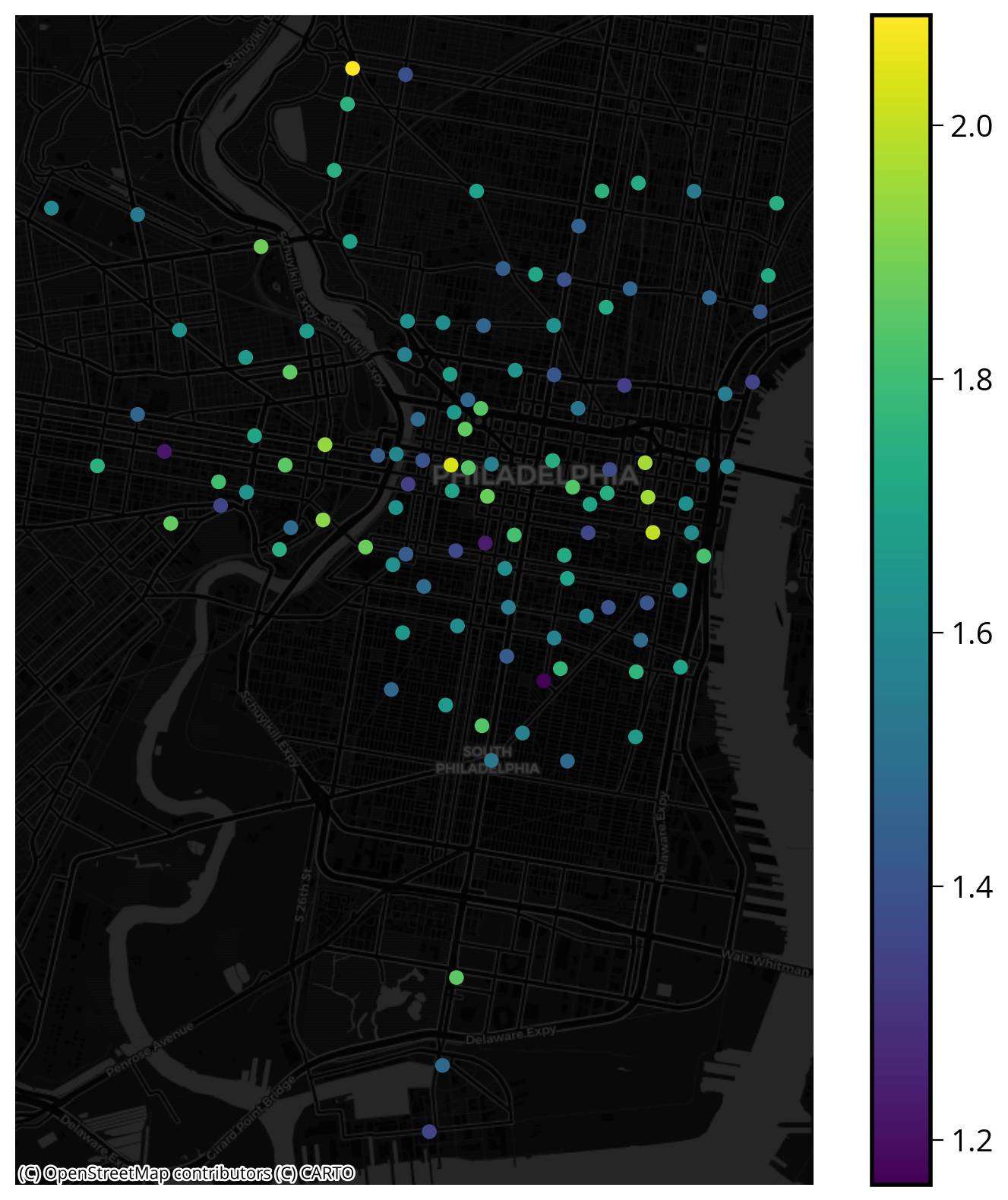

sales['logDistUniv'] = np.log10(univDists.mean(axis=1))fig, ax = plt.subplots(figsize=(10,10), facecolor=plt.get_cmap('viridis')(0))

x = salesXY[:,0]

y = salesXY[:,1]

ax.hexbin(x, y, C=np.log10(univDists.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor='none', edgecolor='white', linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title("Distance to Nearest University/College", fontsize=16, color='white');

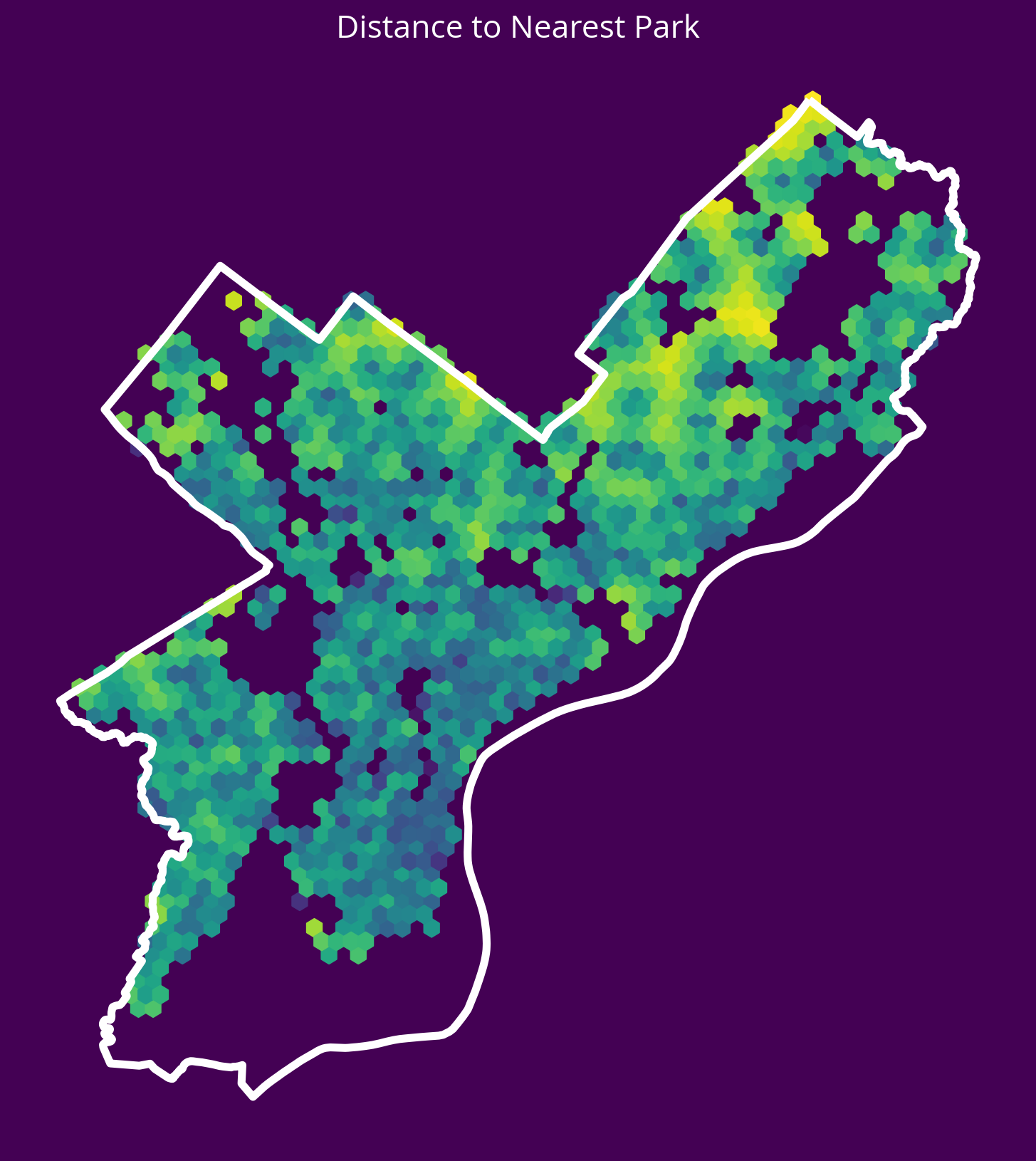

2. Parks

New feature: Distance to the nearest park centroid

- Source: OpenDataPhilly

- GeoJSON URL

Notes - The park geometries are polygons, so you’ll need to get the x and y coordinates of the park centroids and calculate the distance to these centroids. - You can use the geometry.centroid.x and geometry.centroid.y values to access these coordinates.

# Get the data

url = "https://opendata.arcgis.com/datasets/d52445160ab14380a673e5849203eb64_0.geojson"

parks = gpd.read_file(url)

# Get the X/Y

parksXY = get_xy_from_geometry(parks.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=1)

nbrs.fit(parksXY)

parksDists, _ = nbrs.kneighbors(salesXY)

# Add the new feature

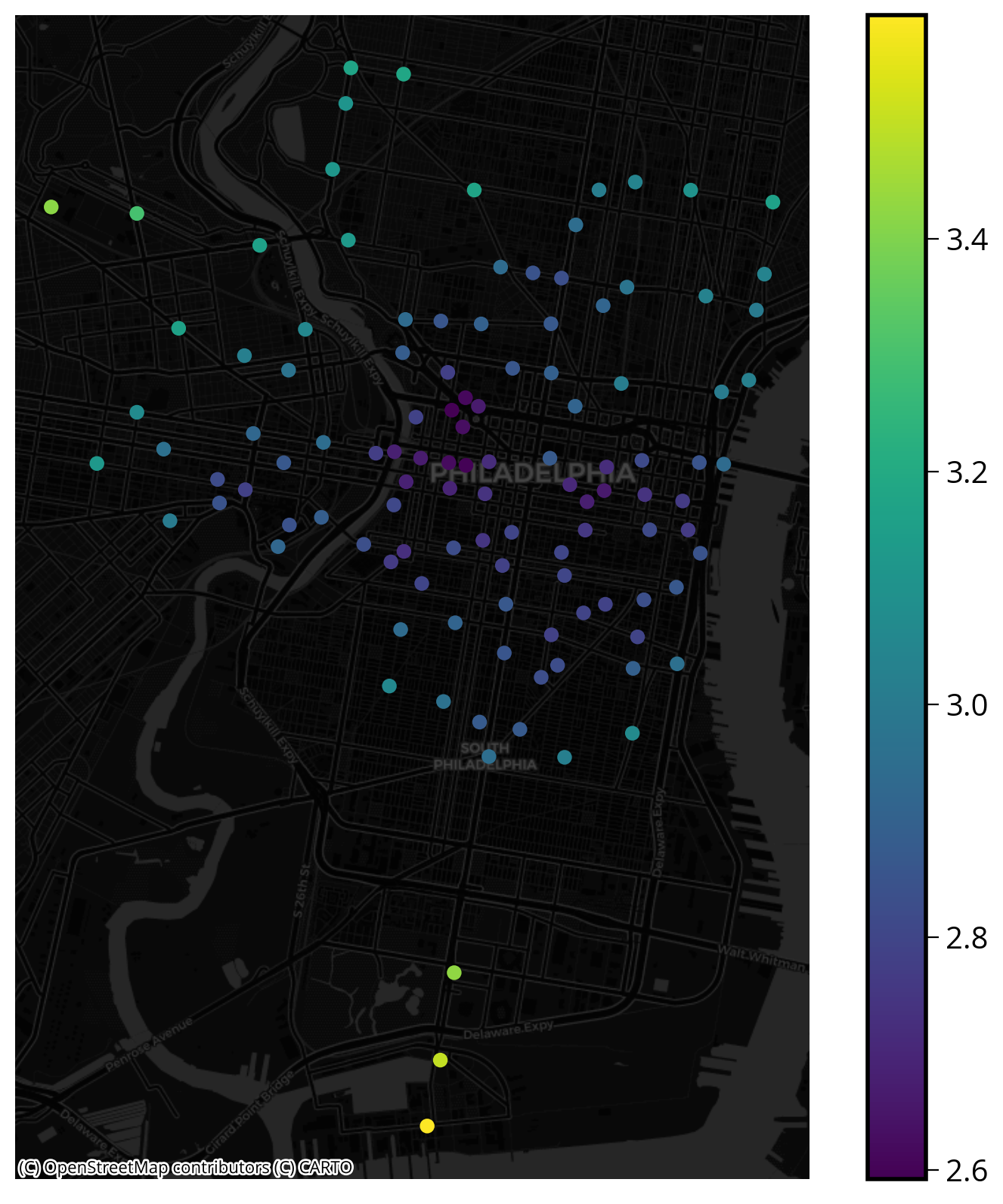

sales["logDistParks"] = np.log10(parksDists.mean(axis=1))fig, ax = plt.subplots(figsize=(10, 10), facecolor=plt.get_cmap("viridis")(0))

x = salesXY[:, 0]

y = salesXY[:, 1]

ax.hexbin(x, y, C=np.log10(parksDists.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor="none", edgecolor="white", linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title("Distance to Nearest Park", fontsize=16, color="white");

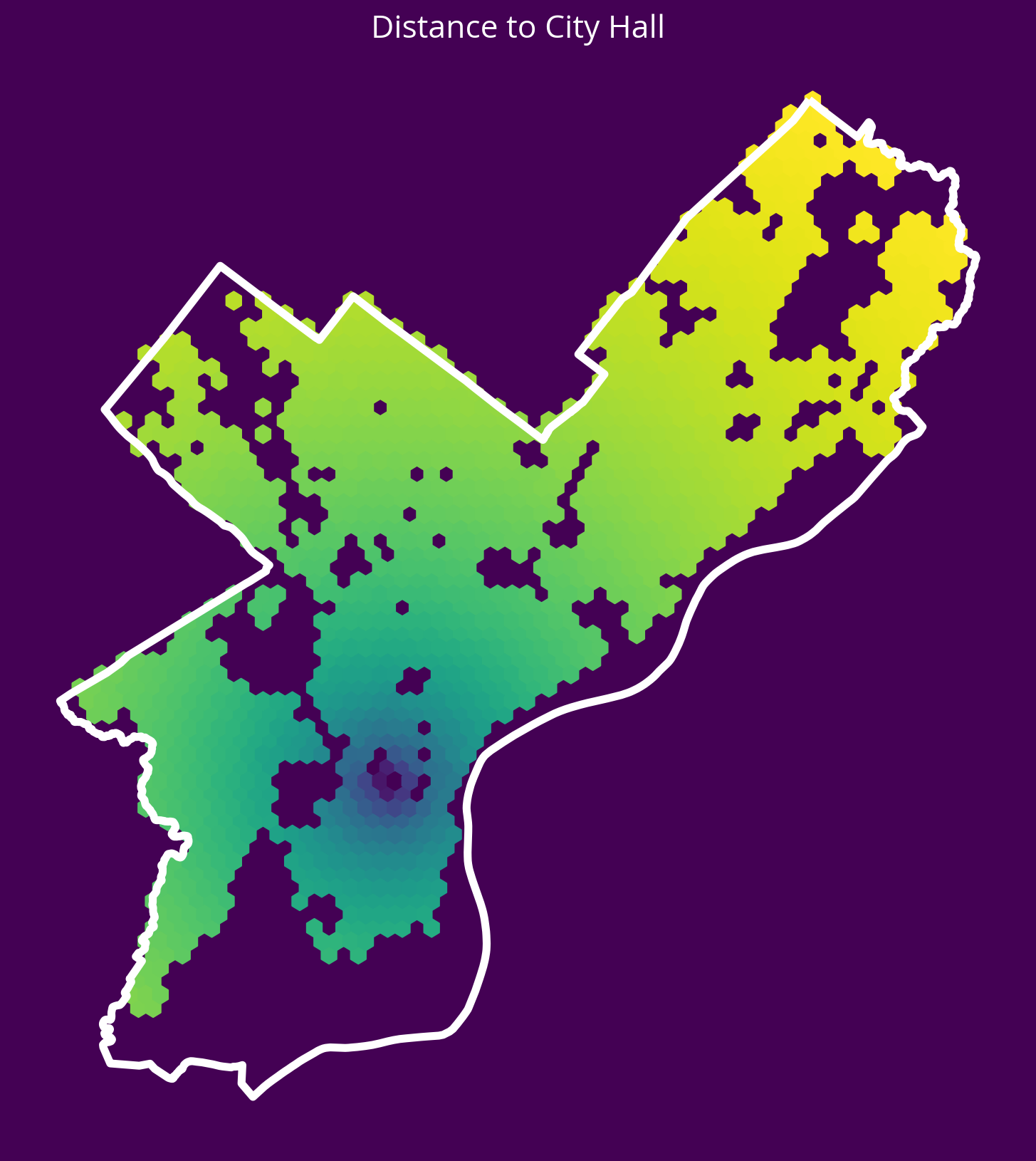

3. City Hall

New feature: Distance to City Hall.

- Source: OpenDataPhilly

- GeoJSON URL

Notes

- To identify City Hall, you’ll need to pull data where “NAME=‘City Hall’” and “FEAT_TYPE=‘Municipal Building’”

- As with the parks, the geometry will be a polygon, so you should calculate the distance to the centroid of the City Hall polygon

# Get the data

url = "http://data-phl.opendata.arcgis.com/datasets/5146960d4d014f2396cb82f31cd82dfe_0.geojson"

landmarks = gpd.read_file(url)

# Trim to City Hall

cityHall = landmarks.query("NAME == 'City Hall' and FEAT_TYPE == 'Municipal Building'")# Get the X/Y

cityHallXY = get_xy_from_geometry(cityHall.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=1)

nbrs.fit(cityHallXY)

cityHallDist, _ = nbrs.kneighbors(salesXY)

# Add the new feature

sales["logDistCityHall"] = np.log10(cityHallDist.mean(axis=1))fig, ax = plt.subplots(figsize=(10, 10), facecolor=plt.get_cmap("viridis")(0))

x = salesXY[:, 0]

y = salesXY[:, 1]

ax.hexbin(x, y, C=np.log10(cityHallDist.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor="none", edgecolor="white", linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title("Distance to City Hall", fontsize=16, color="white");

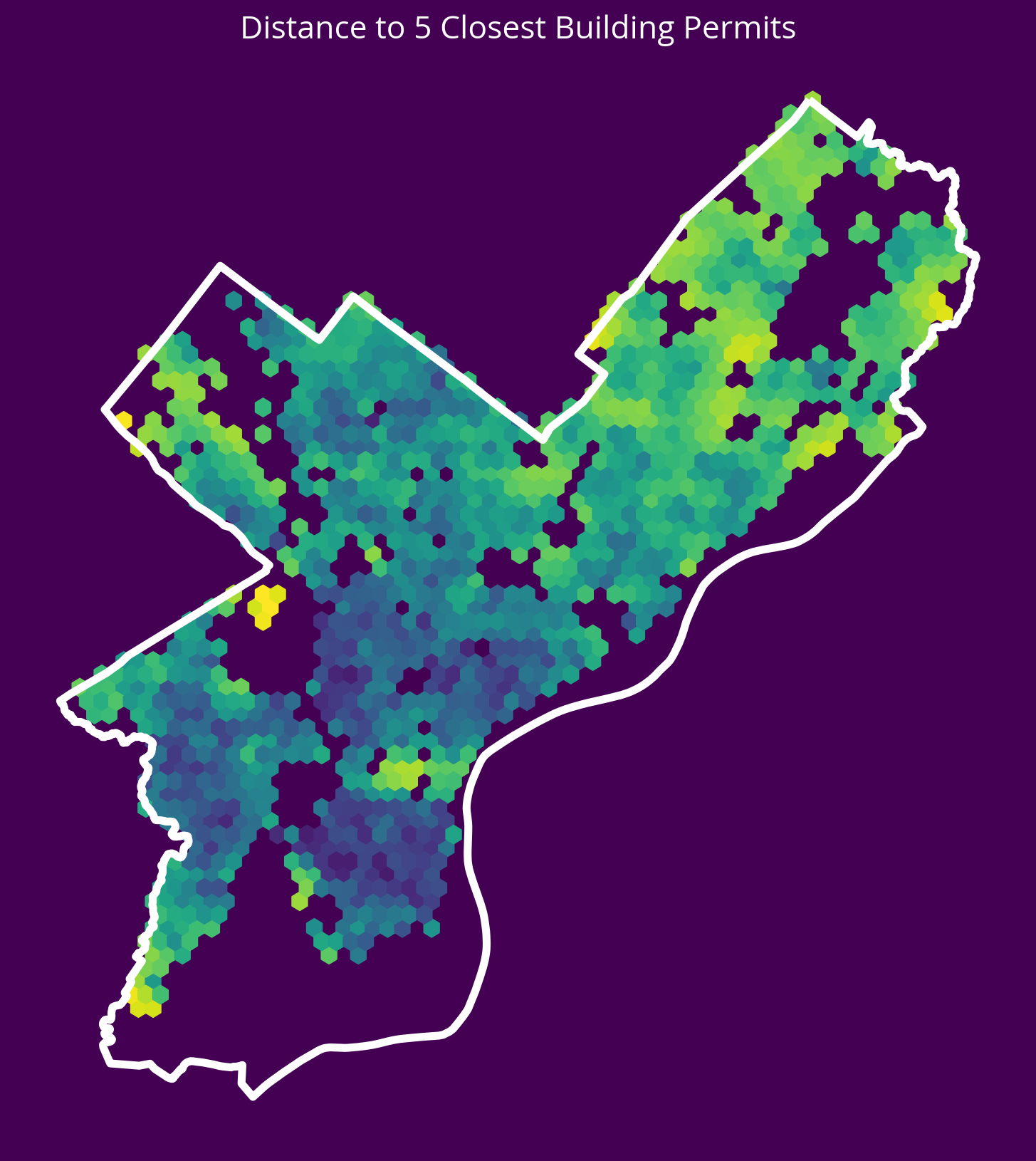

4. Residential Construction Permits

New feature: Distance to the 5 nearest residential construction permits from 2022

- Source: OpenDataPhilly

- CARTO table name: “permits”

Notes

- You can pull new construction permits only by selecting where

permitdescriptionequals ‘RESIDENTRIAL CONSTRUCTION PERMIT’ - You can select permits from only 2022 using the

permitissuedatecolumn

# Table name

table_name = "permits"

# Where clause

where = "permitissuedate >= '2022-01-01' AND permitissuedate < '2023-01-01'"

where = where + " AND permitdescription='RESIDENTIAL BUILDING PERMIT'"

# Query

permits = get_carto_data(table_name, where=where)

# Remove missing

not_missing = ~permits.geometry.is_empty & permits.geometry.notna()

permits = permits.loc[not_missing]

# Get the X/Y

permitsXY = get_xy_from_geometry(permits.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=5)

nbrs.fit(permitsXY)

permitsDist, _ = nbrs.kneighbors(salesXY)

# Add the new feature

sales["logDistPermits"] = np.log10(permitsDist.mean(axis=1))/var/folders/49/ntrr94q12xd4rq8hqdnx96gm0000gn/T/ipykernel_97029/3972340687.py:12: UserWarning: GeoSeries.notna() previously returned False for both missing (None) and empty geometries. Now, it only returns False for missing values. Since the calling GeoSeries contains empty geometries, the result has changed compared to previous versions of GeoPandas.

Given a GeoSeries 's', you can use '~s.is_empty & s.notna()' to get back the old behaviour.

To further ignore this warning, you can do:

import warnings; warnings.filterwarnings('ignore', 'GeoSeries.notna', UserWarning)

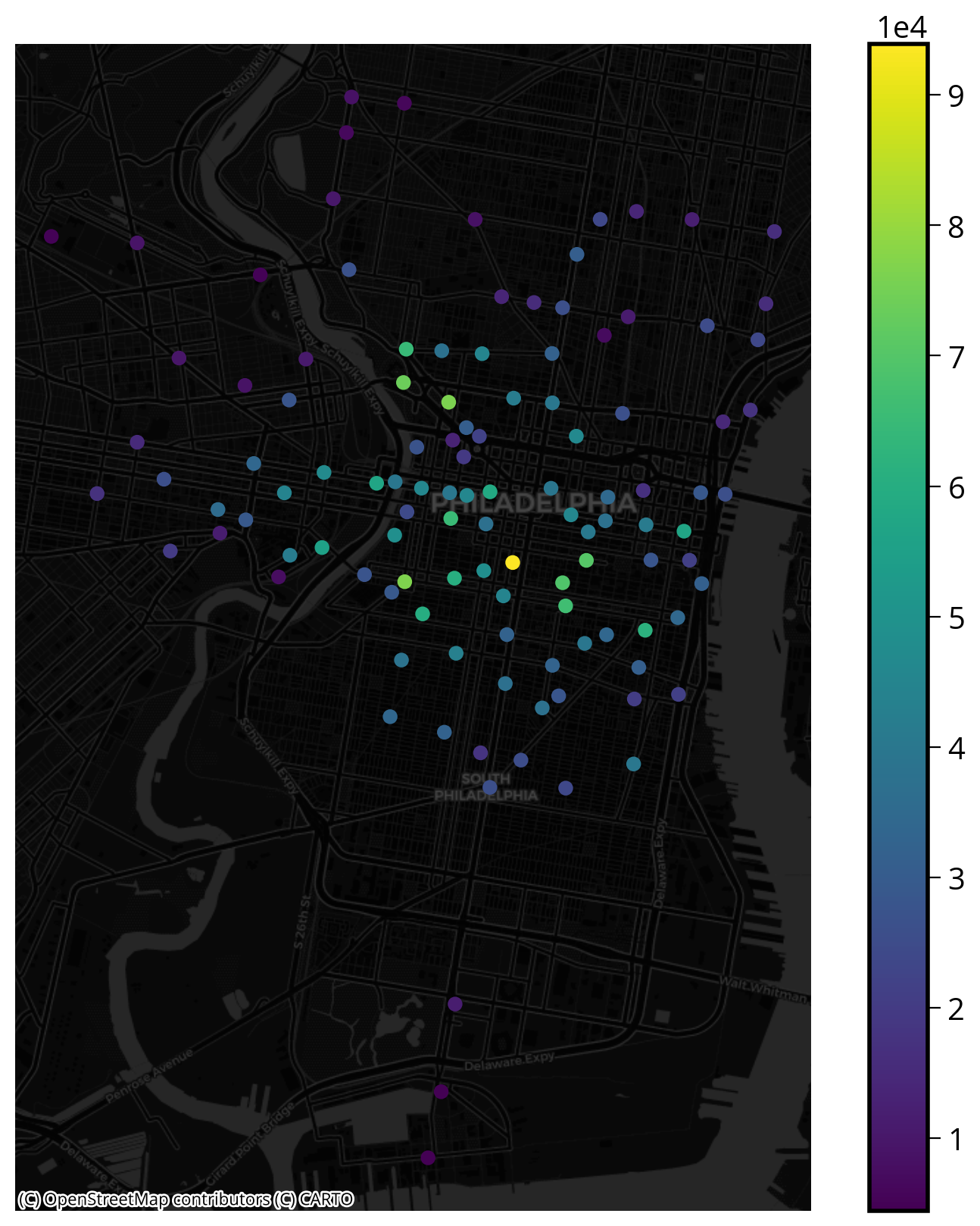

not_missing = ~permits.geometry.is_empty & permits.geometry.notna()fig, ax = plt.subplots(figsize=(10, 10), facecolor=plt.get_cmap("viridis")(0))

x = salesXY[:, 0]

y = salesXY[:, 1]

ax.hexbin(x, y, C=np.log10(permitsDist.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor="none", edgecolor="white", linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title("Distance to 5 Closest Building Permits", fontsize=16, color="white");

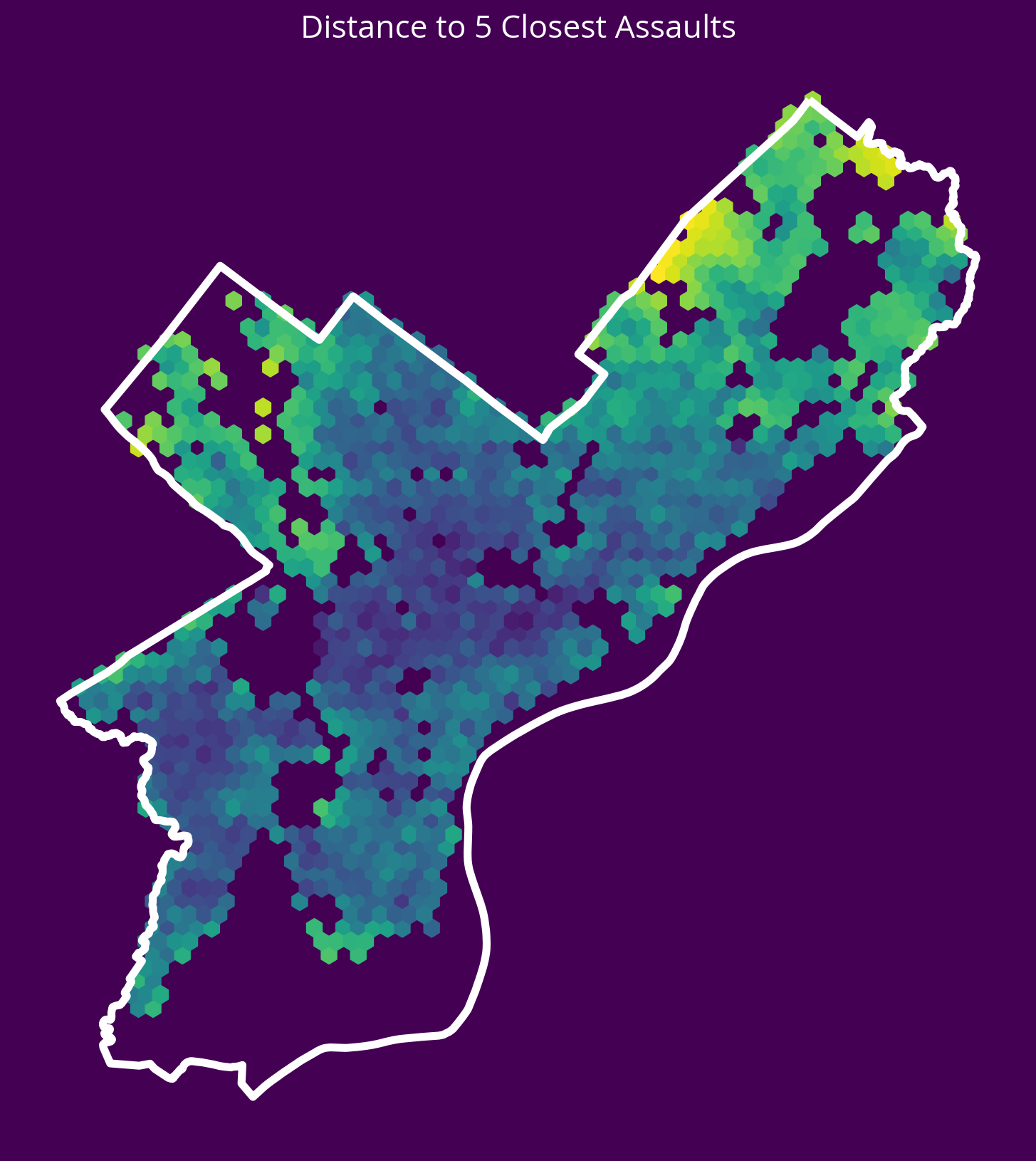

5. Aggravated Assaults

New feature: Distance to the 5 nearest aggravated assaults in 2022

- Source: OpenDataPhilly

- CARTO table name: “incidents_part1_part2”

Notes

- You can pull aggravated assaults only by selecting where

Text_General_Codeequals ‘Aggravated Assault No Firearm’ or ‘Aggravated Assault Firearm’ - You can select crimes from only 2022 using the

dispatch_datecolumn

# Table name

table_name = "incidents_part1_part2"

# Where selection

where = "dispatch_date >= '2022-01-01' AND dispatch_date < '2023-01-01'"

where = where + " AND Text_General_Code IN ('Aggravated Assault No Firearm', 'Aggravated Assault Firearm')"

# Query

assaults = get_carto_data(table_name, where=where)

# Remove missing

not_missing = ~assaults.geometry.is_empty & assaults.geometry.notna()

assaults = assaults.loc[not_missing]

# Get the X/Y

assaultsXY = get_xy_from_geometry(assaults.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=5)

nbrs.fit(assaultsXY)

assaultDists, _ = nbrs.kneighbors(salesXY)

# Add the new feature

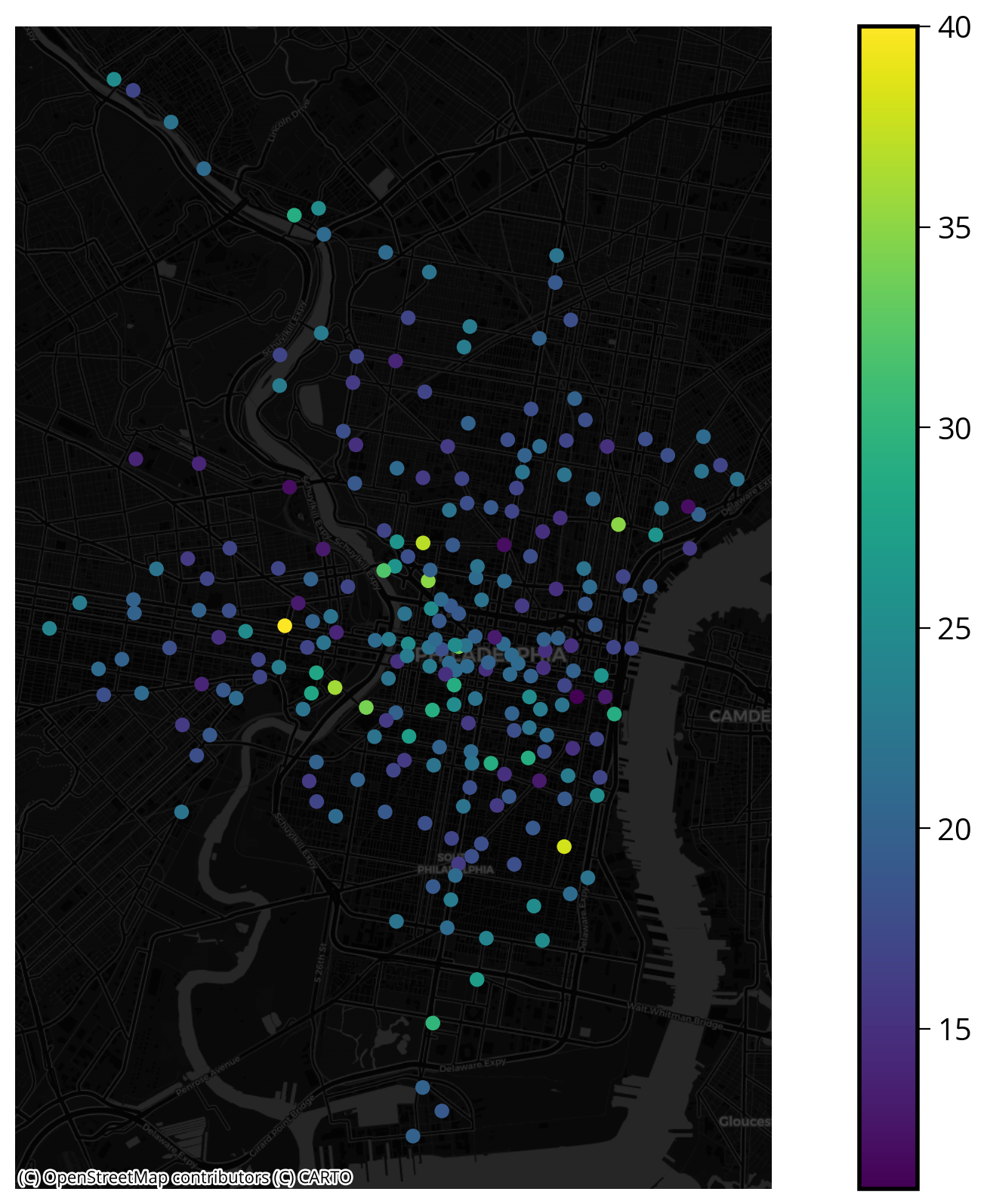

sales['logDistAssaults'] = np.log10(assaultDists.mean(axis=1))fig, ax = plt.subplots(figsize=(10, 10), facecolor=plt.get_cmap("viridis")(0))

x = salesXY[:, 0]

y = salesXY[:, 1]

ax.hexbin(x, y, C=np.log10(assaultDists.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor="none", edgecolor="white", linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title("Distance to 5 Closest Assaults", fontsize=16, color="white");

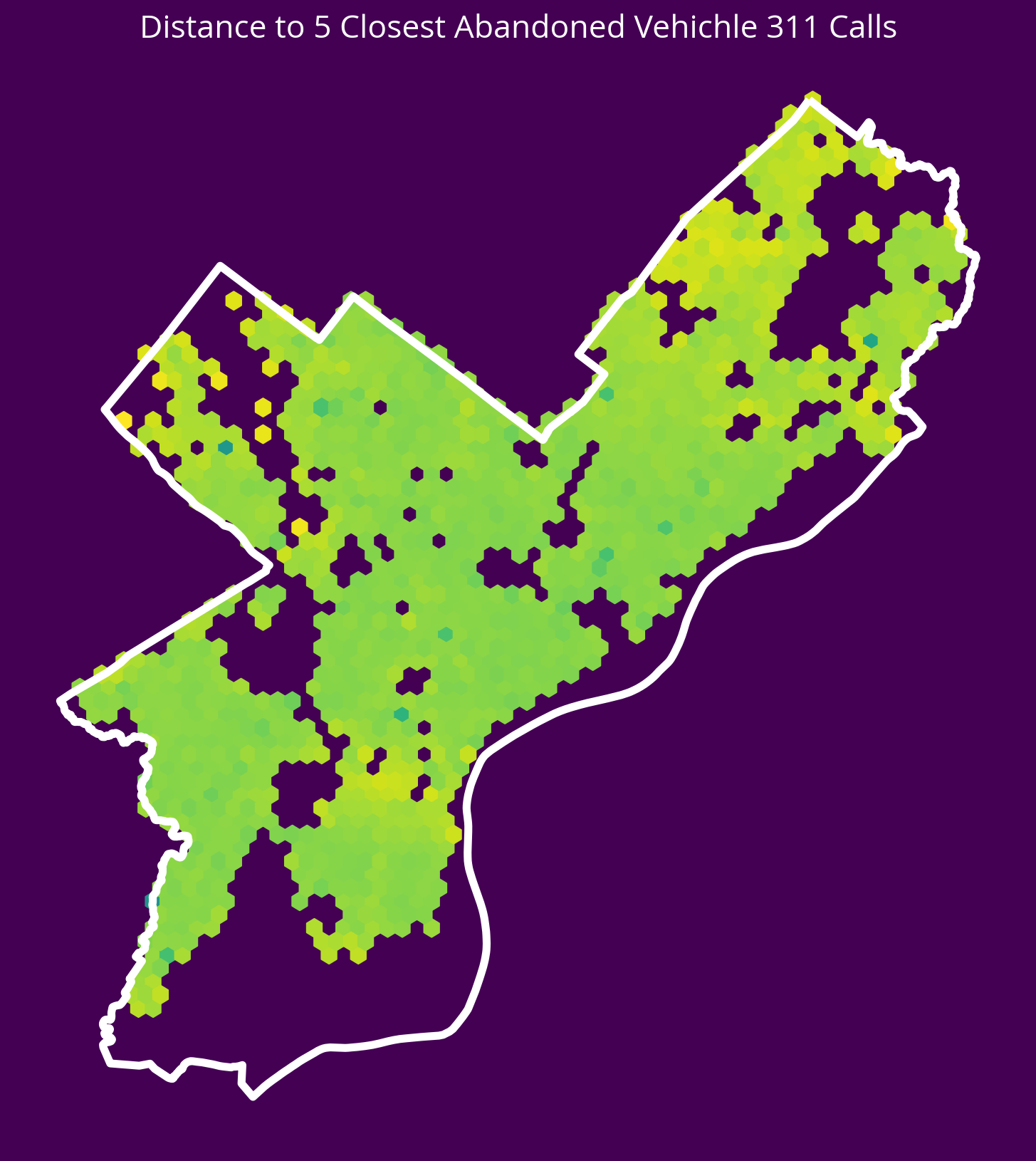

6. Abandonded Vehicle 311 Calls

New feature: Distance to the 5 nearest abandoned vehicle 311 calls in 2022

- Source: OpenDataPhilly

- CARTO table name: “public_cases_fc”

Notes

- You can pull abandonded vehicle calls only by selecting where

service_nameequals ‘Abandoned Vehicle’ - You can select crimes from only 2022 using the

requested_datetimecolumn

# Table name

table_name = "public_cases_fc"

# Where selection

where = "requested_datetime >= '2022-01-01' AND requested_datetime < '2023-01-01'"

where = "service_name = 'Abandoned Vehicle'"

# Query

cars = get_carto_data(table_name, where=where)

# Remove missing

not_missing = ~cars.geometry.is_empty & cars.geometry.notna()

cars = cars.loc[not_missing]

# Get the X/Y

carsXY = get_xy_from_geometry(cars.to_crs(epsg=3857))

# Run the k nearest algorithm

nbrs = NearestNeighbors(n_neighbors=5)

nbrs.fit(carsXY)

carDists, _ = nbrs.kneighbors(salesXY)

# Handle any sales that have 0 distances

carDists[carDists == 0] = 1e-5 # a small, arbitrary value

# Add the new feature

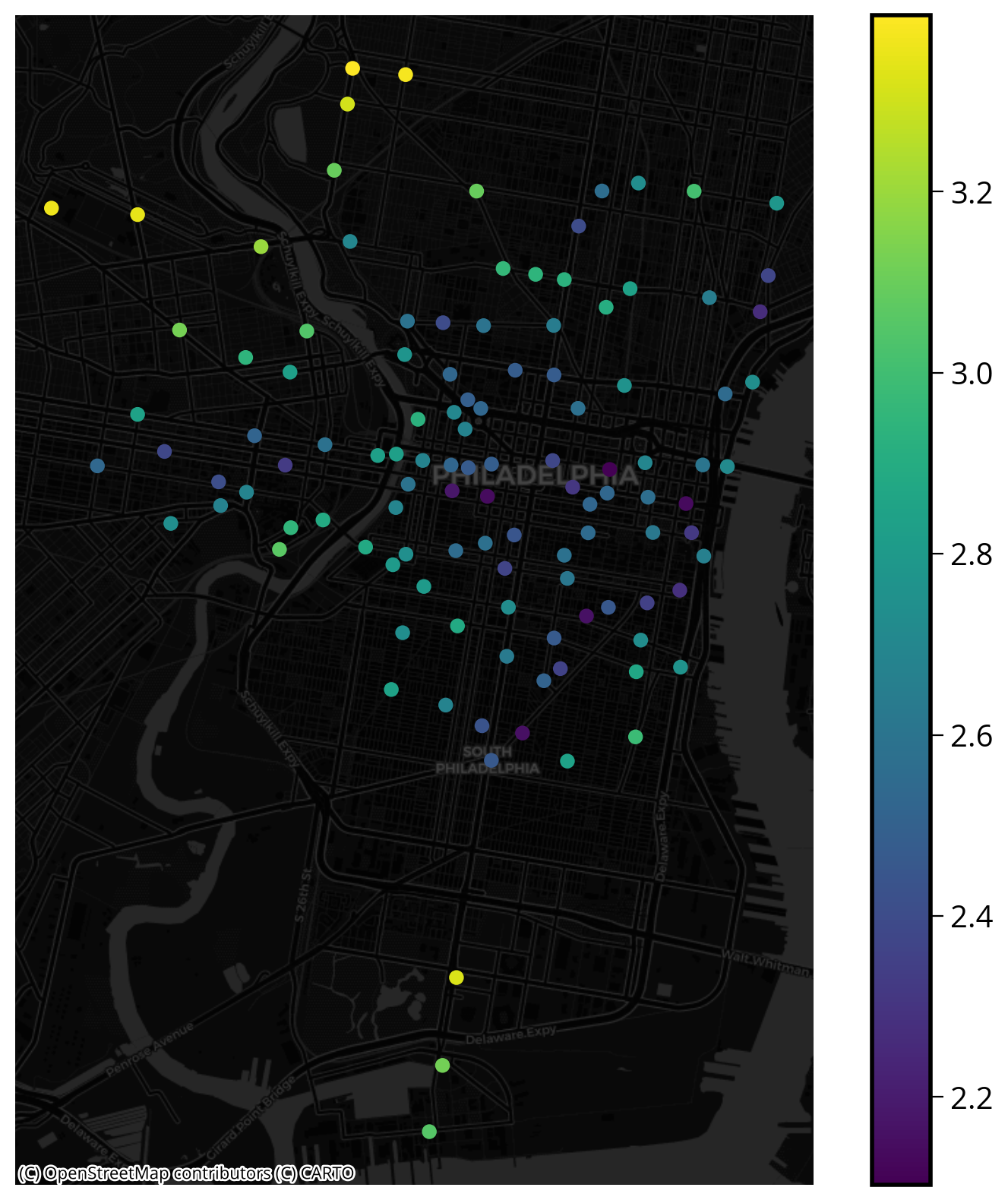

sales["logDistCars"] = np.log10(carDists.mean(axis=1))fig, ax = plt.subplots(figsize=(10, 10), facecolor=plt.get_cmap("viridis")(0))

x = salesXY[:, 0]

y = salesXY[:, 1]

ax.hexbin(x, y, C=np.log10(carDists.mean(axis=1)), gridsize=60)

# Plot the city limits

city_limits.plot(ax=ax, facecolor="none", edgecolor="white", linewidth=4)

ax.set_axis_off()

ax.set_aspect("equal")

ax.set_title(

"Distance to 5 Closest Abandoned Vehichle 311 Calls", fontsize=16, color="white"

);

Fit the updated model

# Numerical columns

num_cols = [

"total_livable_area",

"total_area",

"garage_spaces",

"fireplaces",

"number_of_bathrooms",

"number_of_bedrooms",

"number_stories",

"logDistGraffiti", # NEW

"logDistSubway", # NEW

"logDistUniv", # NEW

"logDistParks", # NEW

"logDistCityHall", # NEW

"logDistPermits", # NEW

"logDistAssaults", # NEW

"logDistCars" # NEW

]

# Categorical columns

cat_cols = ["exterior_condition", "zip_code"]# Set up the column transformer with two transformers

transformer = ColumnTransformer(

transformers=[

("num", StandardScaler(), num_cols),

("cat", OneHotEncoder(handle_unknown="ignore"), cat_cols),

]

)# Two steps in pipeline: preprocessor and then regressor

pipe = make_pipeline(

transformer, RandomForestRegressor(n_estimators=20, random_state=42)

)# Split the data 70/30

train_set, test_set = train_test_split(sales, test_size=0.3, random_state=42)

# the target labels

y_train = np.log(train_set["sale_price"])

y_test = np.log(test_set["sale_price"])# Fit the training set

pipe.fit(train_set, y_train);# What's the test score?

pipe.score(test_set, y_test)0.6160439816432102More improvement!

Feature importances:

# The one-hot step

ohe = transformer.named_transformers_["cat"]

# One column for each category type!

ohe_cols = ohe.get_feature_names_out()

# Full list of columns is numerical + one-hot

features = num_cols + list(ohe_cols)

# The regressor

regressor = pipe["randomforestregressor"]

# Create the dataframe with importances

importance = pd.DataFrame(

{"Feature": features, "Importance": regressor.feature_importances_}

)

# Sort importance in descending order and get the top

importance = importance.sort_values("Importance", ascending=False).iloc[:30]

# Plot

importance.hvplot.barh(

x="Feature", y="Importance", flip_yaxis=True, height=500

)That’s it!

Next week (last week!) we’ll talk about an advanced raster data use case.